[liveblog][PAIR] Antonio Torralba on machine vision, human vision

At the PAIR Symposium, Antonio Torralba asks why image identification has traditionally gone so wrong.


NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people.

If we train our systems on Google Images of bedrooms, we’re training on idealized photos, not the real world. It’s a biased set. Likewise for mugs, where the handles in images are almost all on the right side, not the left.

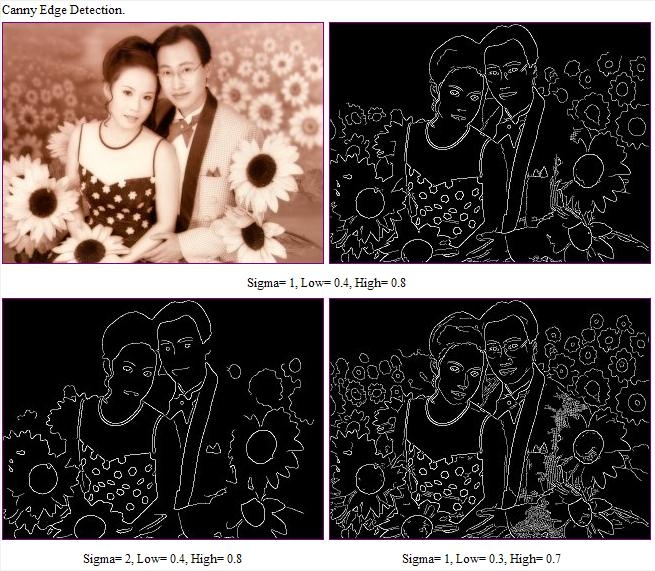

Another issue: The Canny edge detector (for example) detects edges and passes a black-and-white reduction on to the next level. “All the information is gone!” he says, showing that a messy set of white lines on black is in fact an image of a palace. [Maybe the White House?] (A different example of edge detection:)
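To make the information-loss point concrete, here is a minimal sketch of my own, not something shown in the talk. It is not the Canny algorithm itself (no smoothing, non-maximum suppression or hysteresis), just a gradient-magnitude threshold. The function name `edge_map`, the threshold, and the two toy images are all made up for illustration. Two images with different brightness values come out as the identical black-and-white edge map, so the next stage cannot tell them apart.

```python
import numpy as np

def edge_map(gray, threshold=0.2):
    """Crude edge detector: gradient magnitude followed by a hard threshold.

    This is NOT the full Canny algorithm; it only illustrates the step
    where an image is reduced to a black-and-white map of edges.
    """
    gray = np.asarray(gray, dtype=float)
    gy, gx = np.gradient(gray)           # finite-difference gradient per axis
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    if peak > 0:
        magnitude = magnitude / peak     # normalize to [0, 1]
    return magnitude > threshold         # boolean image: True = edge

# Two hypothetical 8x8 images: different brightness values,
# but a vertical boundary in the same place.
dark = np.zeros((8, 8))
dark[:, 4:] = 0.3
bright = np.full((8, 8), 0.5)
bright[:, 4:] = 1.0

edges_dark = edge_map(dark)
edges_bright = edge_map(bright)

# The brightness values are gone; only the boundary location survives.
same_edges = np.array_equal(edges_dark, edges_bright)
```

Here `same_edges` comes out `True`: whatever distinguished the two inputs is no longer available to anything downstream of the edge map.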


Deep neural networks work well, and can be trained to recognize places in images, e.g., beach, hotel room, street. You train your neural net and it becomes a black box. E.g., how can it recognize that a bedroom is in fact a hotel room? Maybe it’s the lamp? But you trained it to recognize places, not objects. It works but we don’t know how.

When training a system on place detection, we found some units in some layers were in fact doing object detection. It was finding the lamps. Another unit was detecting cars, another detected roads. This lets us interpret the neural networks’ work. In this case, you could put names to more than half of the units.

How to quantify this? How is the representation being built? For this: Network dissection. This shows that when training a network on places, object detectors emerge. “The network may be doing something more interesting than your task”: object detection is harder than place detection.
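The talk did not go into the mechanics, so what follows is only a rough sketch of one way to score how well a unit matches a concept: threshold the unit's activation map, then compute intersection over union (IoU) against a pixel mask for the concept. The function names, the `quantile` and `min_iou` cutoffs, and the toy "lamp" and "bed" masks are assumptions for illustration, not values from the talk.

```python
import numpy as np

def unit_concept_iou(activation, concept_mask, quantile=0.9):
    """IoU between a unit's strongest activations and a concept's pixel mask.

    activation:   2-D array, one unit's activation map at image resolution.
    concept_mask: 2-D boolean array, True where the labeled concept appears.
    quantile:     illustrative cutoff; activations above it count as "on".
    """
    activation = np.asarray(activation, dtype=float)
    concept_mask = np.asarray(concept_mask, dtype=bool)
    unit_mask = activation > np.quantile(activation, quantile)
    union = np.logical_or(unit_mask, concept_mask).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(unit_mask, concept_mask).sum() / union)

def name_unit(activation, concept_masks, min_iou=0.5):
    """Return the best-matching concept name, or None if nothing matches well."""
    scores = {name: unit_concept_iou(activation, mask)
              for name, mask in concept_masks.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_iou else None

# Hypothetical 10x10 scene: a "lamp" in the top-left, a "bed" along the bottom.
lamp = np.zeros((10, 10), dtype=bool)
lamp[0:3, 0:3] = True
bed = np.zeros((10, 10), dtype=bool)
bed[7:10, :] = True

# A made-up unit that fires strongly exactly where the lamp is.
activation = np.zeros((10, 10))
activation[0:3, 0:3] = 5.0

label = name_unit(activation, {"lamp": lamp, "bed": bed})
```

In this toy case `label` is `"lamp"`. A unit that overlaps no concept well gets `None`, which corresponds to the units you could not put a name to.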

We currently train systems by gathering labeled data. But small children learn without labels. Children are self-supervised systems. So, take in the RGB values of frames of a movie, and have the system predict the sounds. When you train a system this way, it kind of works. If you want to predict the ambient sounds of a scene, you have to be able to recognize the objects, e.g., the sound of a car. To solve this, the network has to do object detection. That’s what they found when they looked into the system. It was doing face detection without having been trained to do that. It also detects baby faces, which make a different type of sound. It detects waves. All through self-supervision.

Other examples: On the basis of one segment, predict the next in the sequence. Colorize images. Fill in an empty part of an image. These systems work, and do so by detecting objects without having been trained to do so.
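What these tasks share is that the training target is carved out of the unlabeled data itself. A minimal sketch of how the (input, target) pairs might be built, assuming a video clip stored as a NumPy array; the helper names and the random stand-in clip are mine, not from the talk, and the model that would learn from these pairs is left out.

```python
import numpy as np

def next_segment_pair(sequence, t):
    """Input: everything before step t. Target: the segment at step t."""
    return sequence[:t], sequence[t]

def colorization_pair(rgb):
    """Input: a grayscale version. Target: the original color image."""
    gray = rgb.mean(axis=-1)             # plain channel average, for illustration
    return gray, rgb

def inpainting_pair(image, top, left, size):
    """Input: the image with a square blanked out. Target: the original image."""
    masked = image.copy()                # leave the original untouched
    masked[top:top + size, left:left + size] = 0
    return masked, image

rng = np.random.default_rng(0)
video = rng.random((5, 8, 8, 3))         # hypothetical clip: 5 frames of 8x8 RGB

past, future = next_segment_pair(video, 4)
gray, color = colorization_pair(video[0])
holed, full = inpainting_pair(video[0], top=2, left=2, size=4)
```

No human labels appear anywhere: in each case the "answer" is a piece of the data that was held back from the input.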

Conclusions: 1. Neural networks build representations that are sometimes interpretable. 2. The rep might solve a task that’s even more interesting than the primary task. 3. Understanding how these reps are built might allow new approaches for unsupervised or self-supervised training.