October 12, 2018

How browsers learned to support your system's favorite font

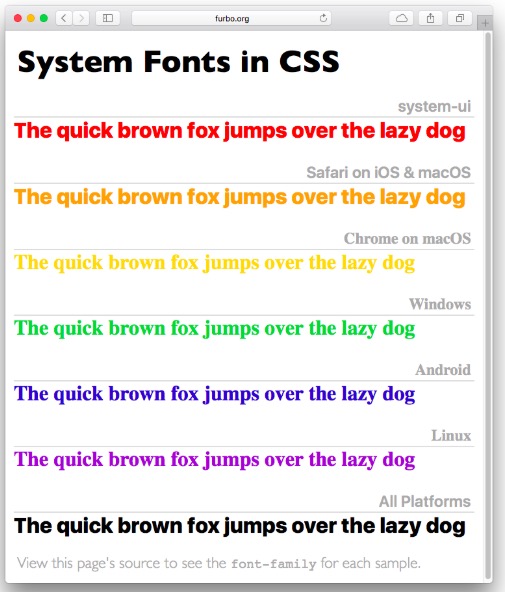

Operating systems play favorites when it comes to fonts: they pick one as their default. And the OS’s don’t agree with one another:

But now when you’re designing a page you can tell CSS to use the system font of whatever operating system the browser is running on. This is thanks to Craig Hockenberry who proposed the idea in an article three years ago. Apple picked up on it, and now it’s worked it’s way into the standard CSS font module and is supported by Chrome and Safari; Windows and Mozilla are lagging. Here’s Craig’s write-up of the process.

Here’s a quick test of whether it’s working in the browser you’re reading this post with:

This sentence should be in this blog’s designated font: Georgia. Or maybe one of its serif-y fall-backs.

This one should be in your operating system’s standard font, at least if you’re using Chrome or Safari at the moment.

We now return you to this blog’s regular font, already in progress.